For decades, state and local departments of transportation have collected traffic data by means of a variety of methods — including sub-surface magnetic induction loops, pneumatic hoses laid across lanes, piezoelectric sensors placed alongside roadways, and vehicle counts by human observers. These traditional traffic data collection methods, however, are limited in coverage and expensive to implement and maintain.

In recent years, alternative sources for traffic data have evolved rapidly, for two reasons: first, the increasing demand for high quality, real-time data to enable intelligent transportation systems (ITS); second, the widespread availability of data on the location, heading, and speed of vehicles from GPS receivers embedded in them and in the smart phones of their drivers — known as crowd-sourced data. This data has greatly increased the ability of government agencies to manage traffic and of private companies to help drivers avoid it. While these developments are making access to real-time traffic information routine, systematic implementation of traffic management systems in metropolitan areas still faces obstacles — most notably, the limited market penetration of smart phones and the fragmentation of authority over roads among many public agencies.

The New Jersey statewide traffic operations center displays INRIX real-time traffic information.

Goals

Both public and private traffic information systems aim to reduce congestion, emissions, and accidents — three mutually-reinforcing goals that are advanced by the same devices and strategies. “There is a very large sweet spot here. By doing a good job in one of these areas, you end up getting a cascade of benefits that help with other goals,” says Richard Mudge, Vice President and Director at Delcan. The company, which works mostly for public sector clients, develops applications of real-time and historical traffic data for traffic management, planning, and policy. Much of that work involves data fusion from multiple sources.

Another, simpler reason for using crowd-sourced traffic data is to deploy engineering staff where it is most important, says Peter Koontz, a traffic engineer for the City of Portland, Oregon. For example, he says, it can help the city determine where the performance of traffic signals has most changed or degraded.

Additionally, crowd-sourced traffic data greatly improves emergency response. “States are finding that they are able to identify potential incidents out of the flow data and they are able to get alerted to them much faster than they were before by waiting for a report to come through 911,” says Jim Bak, senior PR and marketing manager at INRIX. “In New Jersey, they think that they are saving $100,000 per incident in delay costs as a result of having crowd-sourced traffic data.”

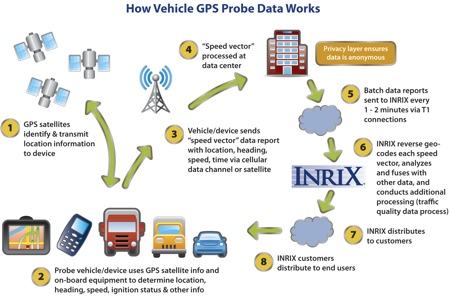

A whole array of probe (crowdsourced) data is compiled. (Source: INRIX)

Growing Use of Crowd-Sourced Data

Real-time data is essential to effectively manage traffic, which is extremely dynamic. Crowd-sourced data, also called probe data, has been looked at seriously in the United States for the last 10 or 12 years, but in the last few years its volume has increased quite dramatically, says Mudge. At this year’s annual meeting of the Intelligent Transportation Society of America (ITSA), several sessions focused on system efficiency and performance measures, both of which can leverage crowd-sourced data, says Ted Trepanier, Executive Director, Public Sector, at INRIX.

Several metropolitan areas in the United States have implemented comprehensive traffic management systems that use crowd-sourced data. According to Trepanier, one of the best examples is the Seattle metro area, where the state’s department of transportation uses a complete arsenal of traffic management techniques and, in addition, “there is cooperation between the many local agencies to communicate and manage traffic in coordination.” Other examples he cites are the Maryland Highways Agency, the Metropolitan Transportation Commission (MTC) in the San Francisco Bay area, SANDAG in San Diego, the Caltrans district in Los Angeles, and the Minnesota Department of Transportation.

The two largest companies that make use of crowd-sourced traffic data are Google and INRIX. According to Matt Ginsberg, co-founder and CEO of On Time Systems, Inc., Google’s data is very good but the company may soon face legal challenges as to its right to collect it from users of cell phones that run its Android operating system. Google wants to have this data but does not want to resell it, while INRIX is very effective at reselling it, he points out. Other players include Waze and Beat the Traffic.

Four Layers

Today, most traffic information systems rely on crowd-sourced data to supplement data from traditional traffic sensors. Yet, most routing systems in use do not take traffic fully into account, says Alexandre M. Bayen, an associate professor of systems engineering in the department of Electrical Engineering and Computer Sciences at the University of California, Berkeley, and principal investigator for its Mobile Millennium (MM) project.

Some take historical traffic data into account and are therefore able to tell you which route is generally better at a certain time of day and day of the week. A few traffic systems are even more sophisticated and take current traffic conditions into account; however, he explains, they assume that traffic will not change while you are en route, which is often not true. “The fourth layer would be a guiding system that is based on forecast, but today there are very few systems that are able to forecast traffic efficiently enough to provide routing based on anticipation of what traffic will be. It is just a very difficult problem.”

Analytics

In addition to plentiful, accurate, and timely data, effective traffic management requires sophisticated analytical software to interpret it. “You don’t want to mistake a taxi cab or a delivery truck stopping to do a pick-up or a drop off to be idle in traffic,” says Bak. Similarly, he points out, it is important to distinguish between vehicles stopped at a traffic light and a traffic jam.

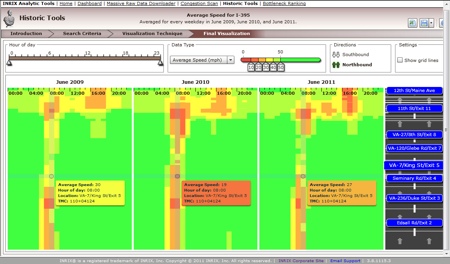

The software that INRIX uses to analyze its data can predict the likely impact of various incidents on a stretch of road, on the basis of similar past situations, and adjust travel estimates accordingly. It does the same any time there is an event, such as a baseball game or a rock concert, attended by 5,000 people or more in one of the markets that it serves. “When there is a 7PM baseball game at the tail end of the rush hour, traffic is going to be very different than on a day when there is not a game.” The company’s algorithms learn patterns over time and factor them into its traffic forecasts. “Believe it or not, when there is no school in session, traffic actually gets better by around ten percent around the country because there are fewer people driving at the same time every day and they don’t pick up and drop off their kids from school,” says Bak.

Graphical congestion scan shows the difference over three years at a glance for I-395NB in Washington, D.C. (Source: INRIX Analytics)

Penetration

For traffic systems based on crowd-sourced data to work, what percentage of drivers must have GPS-enabled smart phones or car navigation systems? “What we’ve shown is that, for freeways, if you have a two percent penetration rate you can provide very accurate speed information,” says Bayen. “For arterials — which have traffic lights, stop signs, etc. — the percentage required is slightly higher.”

The penetration rate needed to reliably monitor traffic flow, Ginsberg says, depends simply on how many vehicles have to be involved in a phenomenon for it to be worth reporting. “Your average traffic jam, even if it is a short but intense slowdown, probably involves at least 100 cars. If you have one percent penetration you probably have somebody who is essentially stationary in this short traffic jam and that puts you in a position to report it. If you look at arterials, a slowdown probably is going to involve 20 to 50 cars, so now you are going to see the numbers go up to two to five percent.” Residential streets, where a slowdown because of construction or an accident can easily involve as few as ten cars, require at least 10 percent penetration, “which is why it is so hard to get accurate information on really small roads.”
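Ginsberg's rule of thumb can be checked with a little arithmetic. The sketch below is only an illustration, not a method attributed to anyone quoted here: it assumes that each car in a slowdown independently carries a reporting device with probability equal to the penetration rate, and the function names are hypothetical.

```python
def expected_probes(cars_in_slowdown: int, penetration: float) -> float:
    """Average number of reporting vehicles caught in the slowdown."""
    return cars_in_slowdown * penetration


def detection_probability(cars_in_slowdown: int, penetration: float) -> float:
    """Chance that at least one car in the slowdown is a reporting probe.

    Assumption (ours, for illustration): each car independently carries a
    reporting device with probability `penetration`.
    """
    return 1.0 - (1.0 - penetration) ** cars_in_slowdown


# Road types and figures taken from the quote above.
scenarios = [
    ("freeway jam", 100, 0.01),
    ("arterial slowdown", 50, 0.02),
    ("arterial slowdown", 20, 0.05),
    ("residential slowdown", 10, 0.10),
]

for label, cars, penetration in scenarios:
    print(
        f"{label}: {cars} cars at {penetration:.0%} penetration -> "
        f"{expected_probes(cars, penetration):.1f} probes expected, "
        f"{detection_probability(cars, penetration):.0%} chance of at least one"
    )
```

Under that independence assumption, every scenario in the quote works out to about one expected probe per slowdown and a roughly 63 to 65 percent chance of having at least one, which suggests the quoted penetration rates are thresholds for being able to report at all rather than guarantees.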

Dispersing Traffic

One concern shared by traffic experts is that, as traffic information systems become ubiquitous, giving a large percentage of drivers the same routing guidance can result in simply moving traffic jams from one place to another. That’s why it’s important to be able to update vehicle navigation systems with very little latency. That, in turn, requires two-way connectivity, fast communications, and processing that is both fast and smart. The rising percentage of vehicles with car “infotainment” systems, which use an Internet protocol-based connection, addresses the first two requirements, while steadily improving analytic software addresses the third one. Routing engines are evolving rapidly and will soon be able to disperse vehicles with greater balance across the road network, says Bak. Crowd-sourced data from private vehicles, he points out, will help this process further by providing information on traffic flow on more streets, thereby giving the system more routing options, including a mix of highways, arterials, and neighborhood streets.

“I would love to have that problem,” says Ginsberg, “because it would mean that we were hugely successful.” For the problem to arise, penetration would probably have to reach at least 20 percent, he says. “At that point, it is very easy to anticipate what is going on.”

However, according to Bayen, significantly reducing congestion, rather than shifting it geographically, requires government action, because today all the routers are “selfish” — that is, they give each driver what is best for them, regardless of the larger impact. As a result, he explains, if everybody is using the same router or a similar router, everybody will try to take the same route and will clog it, making everyone worse off. To avoid this, an authority would have to spread people out so that they would share the burden of the congestion. “That might imply that people are led into routes that are not optimal for them but are optimal for the whole system,” says Bayen. “The technology is there and we have done a lot of work at Berkeley to do this. However, I think that we are far from an institutional framework in which that could be implemented, because it would assume that everybody was equipped with some device that can give you that information and that a very high percentage of people would comply with the guidance that they receive. That might work in a totalitarian state, but to make it work in a country like the United States you might have to give people incentives.”
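The gap between “selfish” and system-wide routing can be seen in a textbook two-road example (often called Pigou's example). This is a toy model chosen for illustration, not the Berkeley work Bayen refers to: one short road whose travel time in hours equals the share of drivers using it, and one wide detour that always takes one hour. The function name is hypothetical.

```python
def average_travel_time(share_on_short_road: float) -> float:
    """Average travel time in hours across all drivers.

    Toy assumptions: the short road takes `share_on_short_road` hours
    (it slows as it fills up); the detour always takes 1 hour.
    """
    x = share_on_short_road
    return x * x + (1.0 - x) * 1.0


# Selfish routing: the short road never takes longer than the detour,
# so no individual driver gains by leaving it and everyone ends up on it.
selfish = average_travel_time(1.0)

# Coordinated routing: search for the split that minimizes the average.
best_share = min((i / 100 for i in range(101)), key=average_travel_time)
coordinated = average_travel_time(best_share)

print(f"selfish: everyone on the short road, average {selfish:.2f} h")
print(f"coordinated: {best_share:.0%} on the short road, average {coordinated:.2f} h")
```

In this toy network the coordinated split lowers the average trip, but the drivers sent to the detour travel longer than those left on the short road, which is the compliance and incentive problem Bayen describes.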

Bayen also points out that many drivers may be more interested in finding the most reliable route than the fastest or shortest one. “If you have a system that tells you that you can go from A to B within 53 minutes, but you have no guarantee of how reliable that is, and you have another system that tells you that you can go from A to B in 58 minutes and with a 90 percent chance that you will be within three minutes of your estimated arrival time, the latter is probably much better.”
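One simple way to express that kind of reliability is the share of past trips that landed within a few minutes of the estimate. The sketch below uses made-up travel-time samples, picked only so that the averages match the 53- and 58-minute figures in the quote; the function name and the choice of the mean as the estimate are likewise assumptions for illustration.

```python
from statistics import mean


def on_time_share(trip_minutes, tolerance_min=3):
    """Return (estimate, share of trips within `tolerance_min` of it).

    The estimate is simply the mean of the observed trip times.
    """
    estimate = mean(trip_minutes)
    hits = sum(1 for t in trip_minutes if abs(t - estimate) <= tolerance_min)
    return estimate, hits / len(trip_minutes)


# Hypothetical observations in minutes, not real data.
route_a = [40, 45, 48, 50, 52, 53, 55, 58, 62, 67]  # faster on average, erratic
route_b = [55, 56, 56, 57, 57, 57, 58, 58, 58, 68]  # slower on average, steady

for name, samples in (("A", route_a), ("B", route_b)):
    estimate, share = on_time_share(samples)
    print(f"route {name}: estimate {estimate} min, "
          f"{share:.0%} of trips within 3 min of it")
```

A router that ranked routes on this share, or on a high percentile of travel time, instead of on the average alone would favor the steadier route in a case like this one.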

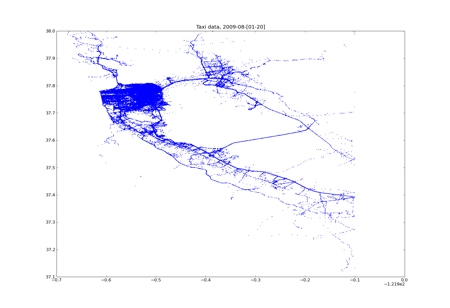

Mobile Millennium probe data collected from a fleet of 500 vehicles in the Bay Area sending their data every minute.

Remaining Obstacles

What are the remaining obstacles to implementing efficient, real-time routing and traffic management systems in all metropolitan areas? “Honestly, none,” says Trepanier. “Companies like INRIX have these systems available today and our mobile application makes this information available directly to drivers. Data services, including analytics, provide a deeper view of real-time system conditions and performance measures for public transportation agencies.” However, market penetration is still a challenge for INRIX. Additionally, Ginsberg points out, many drivers are reluctant to turn on a navigation application on their smart phone because GPS devices are very power hungry and drain phone batteries quickly.

“The biggest problem, I think, is institutional,” says Mudge. “It is rare to find any place where a single entity has systematic control. The state DOT may be in charge of certain roads, a city may be in charge of another set, the county in charge of another. The system is not managed from an integrated point of view. If you go out and you coordinate traffic signals on one arterial, that’s nice, but unless you coordinate traffic signals on, say, 40 major arterials in a metropolitan area, you are just going to shift problems from one place to another. The 40 major arterials may be controlled by 50 different cities and they may not see a reason to work together.”

Mudge also thinks that state and local governments should analyze the economic and social benefits of coordinating traffic management on a large scale. “An awful lot of the decision making is done on a project-by-project basis and doing that is fine, you can get good things done, but you also miss the productivity benefits that you gain from coordination. In much of transportation, the whole is greater than the sum of the parts. The classic example of that is the interstate highway system.”

COMPANY PROFILES

INRIX (http://www.inrix.com)

INRIX, the largest provider of traffic data in the world, monitors real-time traffic flow on more than a quarter million miles of roads in the United States and provides that information to several automakers, GPS receiver manufacturers, and telecommunications companies, including AT&T, BMW, Ford, Garmin, Mercedes-Benz, TomTom, Navigon, and Sprint. “First and foremost, we are trying to improve urban mobility for the world’s four billion drivers,” says Bak. “We are trying to do that in ways that reduce the economic, environmental, and individual toll of traffic congestion.”

INRIX aggregates data from hundreds of public and private sources, analyzes them, and turns them into traffic information. In addition to data from traditional road sensors — which are mostly on highways and interstates — it also collects reports on accidents, road closures, construction, and other incidents that impact traffic, as well as traffic flow data from a crowd-sourced network of 100 million vehicles and devices in 30 countries. Most of its employees are tasked with creating algorithms and analytics to make sense of all the data that the company gathers.

INRIX began, seven years ago, by collecting data from long-haul freight trucks, local delivery trucks, taxi cabs, airport shuttles, and so on, equipped with GPS receivers and two-way connectivity. Fleet vehicles are still a major component of its system. “We provide the traffic information that helps them do next-day planning and plan their routes for all of their vehicles. In return, they agree to give us data that we can use to improve our traffic service,” says Bak. “We love fleet vehicles, because those guys are on the roads up to eight hours a day, providing data to us for every minute of every hour that they are on the roads.”

When it started, the company was able to send traffic data to passenger cars, but not to collect data from them in real time. Now, however, a growing percentage of passenger cars are sold with systems that provide two-way connectivity — such as Ford SYNC, Toyota Entune, and Audi Connect — which allow INRIX to collect real-time data that greatly improves its ability to understand changing traffic conditions. “This data is great,” says Bak, “because consumers go deep into the neighborhoods, where the fleets don’t go.” However, he points out, the downside of relying on consumer devices is that they provide data only when a navigation application is running. Therefore, companies that rely only on crowd-sourcing from consumer devices have much smaller datasets. “The more data you have, the more the power of your network grows, and the more able you are, if you have good analytics, to do really precise and accurate things with the data.”

Currently, about 60 percent of INRIX’s travel data comes from commercial fleet vehicles and about 40 percent from consumer vehicles and mobile devices, but the latter share continues to grow, Bak says. Today, he points out, only about 10 percent of all new Ford vehicles have SYNC, but Ford predicts that this number will grow to 50 percent within the next five years.

Mobile Millennium (http://traffic.berkeley.edu)

MM — a public-private research partnership between the University of California at Berkeley, the Nokia Research Center, and NAVTEQ, with sponsorship from the California Department of Transportation — was one of the very first systems that was built using GPS-enabled smart phone crowd-sourced data, according to Bayen. Beginning in November 2008, it conducted a one-year pilot program during which more than 5,000 users downloaded its traffic software onto their phones. MM aims to prototype traffic-monitoring systems that use crowd-sourced data, Bayen explains. “Our goal is to study how to integrate crowd-sourced data with all kinds of data, so as to provide the next generation traffic information systems.”

MM is a traffic fusion engine that can merge into traffic estimates all sorts of traffic data — including data from fleet vehicles, loop detectors, radars, and readers of RFID tags used to pay tolls without stopping. “For California,” Bayen says, “we get data from thousands of static sensors, we get millions of GPS points every day, and we have data from hundreds of radars. Obviously, the novelty of the system is the use of data from GPS-enabled smart phones.”

On Time Systems (http://www.otsys.com)

OTS collects real-time data on traffic lights from cities and on vehicle location and speed from drivers, via a smart phone application it has developed. In exchange for drivers’ data, it gives them routes designed to avoid red lights. In Eugene, Oregon, where he lives, the application saves Ginsberg about five percent in fuel consumption, he says. The problem, he points out, is that people will not use this application to get to and from work, because they think that they already know the best route. “From our perspective, the trick is finding a way to convince people to give us more of their data so that we can do a better job crowd sourcing.”